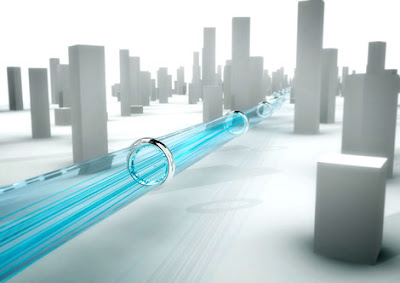

As the old adage goes, a picture is worth a thousand words. But when you're talking about a picture of the Internet, it's worth a whole lot more (about 100 trillion words according to Google's director of research, Peter Norvig). Creating a complete map of the Internet is a goal that has remained largely elusive because of the ever-growing, highly complex nature of the Net. Now, through the efforts of researchers in Israel (from Bar-Ilan University, in Ramat Gan; Hebrew University of Jerusalem; and Tel Aviv University), the fuzzy image of the Internet has just gotten a bit clearer. The Israeli team recently published a paper in the Proceedings of the National Academy of Sciences in which they construct a new, more accurate picture of the Internet using a combination of graph-theory analysis and distributed computing.

A network like the Internet is composed of various nodes, or devices, such as computers, routers, PDAs, and so on, that are linked by one or more physical or virtual paths. To determine the structure of the Internet, researchers had to map out all these nodes and links and their relationships to one another. The main problem previous Internet cartographers faced was insufficient information. Data about network structure had been acquired through a limited number of observation points—computers using a software tool called traceroute to determine the path taken by data packets as they moved from source to destination through a network. The trouble was there were too few of these data points to generate a complete picture—a task comparable to a handful of people walking around and trying to map out the entire world.

To overcome this limitation, a team of researchers at Tel Aviv University headed by Yuval Shavitt developed the Distributed Internet Measurement and Simulation (DIMES) project. DIMES gathers network structure information through a technique known as distributed computing. Volunteers download an agent program onto their personal computers, much as with SETI@home or Folding@home; the agent runs in the background, gathering network data without interfering with system processes. This data, consisting of information related to 20 000 network nodes, is then processed by using a technique of graph theory called k-shell decomposition.

According to Shai Carmi of Bar-Ilan University, who performed much of the data analysis, k-shell decomposition breaks up the nodes in the network into nested hierarchical shells. It starts by finding all the nodes having only one connection. Those nodes are removed from the network and assigned the outermost shell, the 1-shell. Next, the nodes having two or fewer remaining connections are removed to the 2-shell. The process continues until all the nodes have been indexed. Using this technique, the team categorized the various shells as belonging to the network's crust, core, or nucleus—the best-connected nodes.
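The peeling procedure Carmi describes can be sketched in a few lines of Python. This is a minimal illustration rather than the team's actual code: the function name and the toy edge list are made up for the example, and it assumes the usual graph-theory convention that pruning repeats within each shell until no node with k or fewer remaining connections is left.

```python
from collections import defaultdict

def k_shell_decomposition(edges):
    """Assign each node a shell index by repeatedly peeling low-degree nodes."""
    neighbors = defaultdict(set)
    for u, v in edges:
        neighbors[u].add(v)
        neighbors[v].add(u)

    degree = {node: len(nbrs) for node, nbrs in neighbors.items()}
    remaining = set(neighbors)
    shell = {}
    k = 1
    while remaining:
        # Peel every node with k or fewer remaining connections, and keep
        # peeling, because each removal can lower a neighbor's degree.
        to_prune = [n for n in remaining if degree[n] <= k]
        while to_prune:
            for node in to_prune:
                shell[node] = k
                remaining.discard(node)
                for nbr in neighbors[node]:
                    if nbr in remaining:
                        degree[nbr] -= 1
            to_prune = [n for n in remaining if degree[n] <= k]
        k += 1
    return shell

# Toy network (hypothetical): a triangle a-b-c with a two-node tail d-e.
toy_edges = [("a", "b"), ("b", "c"), ("a", "c"), ("a", "d"), ("d", "e")]
toy_shells = k_shell_decomposition(toy_edges)
```

In the toy network, the tail nodes d and e land in the 1-shell and the triangle forms the 2-shell, which here plays the role of the nucleus.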

The nucleus consisted of more than one type of node. It included nodes like ATT Worldnet, having more than 2500 direct connections, and nodes like Google, which links to only 50 other nodes. The reason Google is in the nucleus is that it connects almost exclusively to the best-connected nodes in the Internet.

The size of the dots on the map identifies how many connections they make, while their k-shell index is indicated by their color and position.


Vinoth

Thursday, February 19, 2009

A New Way of Looking at the Internet

Networking Know-How

Looking for a job? Even if you aren't, it's important to remember that the era of lifetime employment at a single company is over. Sooner or later, most of us are going to find ourselves looking for a new employer. Bearing that in mind, you need to make sure that your next job is a step up, not a stopgap, and one of the best ways to do this is by networking with others in your industry and related fields, even while you're happily employed.

Career-related professional organizations, such as the IEEE, are an ideal way to build networks. Attending local chapter events, or better still, getting involved with running a society, will make it more likely that when you send your job application to a business, you won't be a complete unknown.

"Engineers are mostly introverts, but eventually they're going to have to get their faces out of the computer," says Mark Mehler, coprincipal of CareerXroads, a recruiting technology consulting firm in Kendall Park, N.J. "Technology is not what's going to get you a job. You still have to call up and meet the contacts you make.

"The Internet and online social networks are great tools for gathering names and information" that can stand you in good stead, Mehler advises. He is referring to the latest trend in online job hunting and hiring: tapping social and professional networking sites. These sites typically allow you to post a profile, listing information about yourself as well as services and skills you have to offer. What's more, reciprocal connections can be made between the profiles of different users, allowing for friend-of-a-friend style introductions. This is known as a "six degrees of separation" model, named for the theory that states that every human being is connected by a chain of at most six people. The sites also enable people to forge long-term business relationships around outside interests and skill sets.

On these sites, job hunters gain access to top executives (as opposed to the human resource reps often listed as want-ad contacts), and personal relationships can lead to unadvertised openings. On the other side of the coin, recruiters trolling these sites can seek out candidates instead of waiting for them to apply. Beyond jobs, these sites also allow users to secure venture capital and consulting work, advertise their services, research company culture, track the competition, and get advice.

"The idea is to start befriending recruiters and developing relationships with influential people well before you need them," says Krista Bradford, a two-year IEEE member who runs Bradford Executive Research LLC, a technology-focused recruitment and research firm in Westport, Conn.

As with any technology, there are ways to get the most out of your online networking. Following are a few tips:

Plan ahead. As noted above, networking sites are most effective before you need a job. "This is something you should be doing during the course of your career—especially if you don't normally get these types of [social] interactions," says Rob Leathern, director of marketing for LinkedIn, a networking site based in Palo Alto, Calif., that allows users to search for job listings offered by other site members. "The biggest mistake is leaving it to [only the times] when there are layoffs." When, earlier this year, enterprise application developer PeopleSoft Inc. merged with database maker Oracle Corp., in Redwood Shores, Calif., there was massive downsizing and "4000 of [PeopleSoft's] employees joined [LinkedIn] in 45 days," says Leathern.

It also may require testing several sites before finding the one that best suits your needs and interests. A site is "only worth as much as the cooperation of its participants," notes Bob Rosenbaum, director of Small Advantage, a nanotechnology marketing consultancy in Tel Aviv, Israel. "On the one hand, the Internet enables equal access to influential people who can really help," he says. But the equality of access "can actually be a drawback to finding quality connections. It's like online dating. People can say whatever they want about themselves."

Be selective. Not everyone listed on a person's connections list is necessarily someone they would endorse, and often people are added on a quid pro quo basis. Employers should note whom people are connected to in their networks and watch for seemingly ubiquitous names. "If someone is connected with real heavyweights or accomplished technologists, it gives me a sense they're running in the right crowd," says recruiter Bradford. "Other names I see attached to everybody, and I wonder how discriminating they are."

"Be careful who you let into your network," warns CareerXroads' Mehler. "Remember people want to reach your friends as much as you want to reach theirs." Users should also note site rules before joining, to see whether the site owners can claim access to your contacts for their own use and if they police their networks.

Be professional. Just as these networking sites can help applicants research a company, so can they help a company research you. "I won't always tell people I have a position open, because [then] they're more likely to let their hair down and show more of their true selves," says Keisha Richmond, president of Richmond Technology Solutions Inc., Deer Park, N.Y., an IT consulting company. She remembers "a lady who was always nasty to people whenever someone posted a differing opinion in an online discussion group. The owner of the discussion board finally told her not to post any more messages. Before this, I thought this might be someone I'd want to work with."

And despite privacy controls offered by some sites that limit what profile information is publicly readable, information can leak out. What makes someone a hoot at a party may not be perceived as the best endorsement of his or her professional skills—like the guy who lost a job when one employer noticed his full-body tattoos plastered on Tribe.net, a social and community networking site. "Be aware that if it's searchable in Google, it's fair game," says Bradford.

Be specific. Be as detailed as you can in the kind of contacts and job information you're seeking. When responding to job openings on a networking site, don't send generic information and leave it to the recruiter to figure out how you can work with a company. You've taken the trouble to seek someone out. They should at least know why you're there.

Site List

AlwaysOn-Network.com: This site offers comments on tech industry investors and executives. It is especially good for jobs at venture-funded tech start-ups.

LinkedIn.com: This is the preferred network for many professionals. It offers links and carries listings from DirectEmployers.com, a job database provided by a large consortium of U.S. corporations.

TheTeng.org: The Technology Executives Networking Group is a networking and resources site for senior-level IT officers of start-ups, global Fortune 100 companies, nonprofits, and government agencies. It has chapters in 15 cities.

Careers.ieee.org: Finally, don't forget the IEEE Job Site. Anybody can search its database of global job listings, read career-related articles, and see background information about top employers. IEEE members can also create profiles and get automatic notifications when matching jobs are posted by employers.

Could the Internet Fragment?

Logging onto the Internet is billed as a universal experience. Yet for years, below the radar of most people’s online experience, there have been sets of Internet domain names accessible to some people but not to everyone. Before the introduction of an official .biz domain, an independent .biz had existed for six years—and for a time the two survived in parallel, so that typing in a .biz address might send you to different sites, depending on which Internet service provider you had.

And now countries using non-Roman alphabets, notably China, are pressing for full domain names in their native scripts, prompting concern about whether the domains will be accessible to users of Roman alphabets. Will the proliferation of alternative domain names make the Internet more user-friendly and global, or will it accelerate a fracturing of the Internet that may have already begun?

The universality of the Internet lies in the domain name system, which translates between the names we humans understand, such as google.com and ieee.org, and the numerical addresses of the computers hosting those domains. To retrieve a URL or deliver an e-mail, a user’s computer needs the address of the relevant remote computer. Often a local server, known as a domain name server, will already know it. But when the local server doesn’t, there’s a defined way to find it out: start at the end of the address and work backward.

That final, or right-most, part of a domain name, such as .cn, .uk, .com, or .org, is called the top-level domain. At the root of the system, a set of 13 root server operators maintains the authoritative list of servers for those top-level domains. The top-level domain servers, in turn, can offer more specific address information for “google,” “ieee,” and the like. Once a request gets to the second-level domain, local servers for those domains can direct the original Web request or e-mail to the right Web or mail server.
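To make the right-to-left lookup concrete, here is a toy sketch in Python. Everything in it is hypothetical: the server names and tables are invented for illustration, the addresses come from the range reserved for documentation (192.0.2.0/24), and a real resolver speaks the DNS protocol over the network rather than reading dictionaries.

```python
# Hypothetical delegation tables standing in for real name servers.
ROOT = {"org": "org-tld-server", "com": "com-tld-server"}
TLD_SERVERS = {
    "org-tld-server": {"ieee": "ieee-name-server"},
    "com-tld-server": {"google": "google-name-server"},
}
DOMAIN_SERVERS = {
    "ieee-name-server": {"www.ieee.org": "192.0.2.10"},
    "google-name-server": {"www.google.com": "192.0.2.20"},
}

def resolve(name, cache):
    """Return (address, servers consulted) for a fully qualified name."""
    name = name.lower().rstrip(".")
    if name in cache:
        # The local server already knows the answer.
        return cache[name], ["cache"]

    labels = name.split(".")
    if len(labels) < 2:
        raise ValueError("expected at least a second-level domain")
    top_level, second_level = labels[-1], labels[-2]

    # Work backward from the end of the name: root, then top-level
    # domain server, then the server for the second-level domain.
    tld_server = ROOT[top_level]
    domain_server = TLD_SERVERS[tld_server][second_level]
    address = DOMAIN_SERVERS[domain_server][name]

    cache[name] = address
    return address, ["root", tld_server, domain_server]

local_cache = {}
first_lookup = resolve("www.ieee.org", local_cache)
second_lookup = resolve("www.ieee.org", local_cache)
```

The first lookup walks all three levels; the second is answered from the local cache, which is why most queries never reach the root at all.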

“There shouldn’t be any kind of local name that works only in some places, from some ISPs,” says Paul Vixie, one of the designers of the system. Hence, a single body, the Internet Corporation for Assigned Names and Numbers (ICANN, in Marina del Rey, Calif.), is responsible for assigning top-level domains. The entire system is predicated on each domain’s being unique. There can be only one .biz, for example, for the same reason that a postal service can’t have, say, two different states or provinces represented by the same abbreviation.

And yet, for a while at least, there were two different .biz domains. Karl Denninger, a network consultant, created a .biz top-level domain in 1995. It was later administered by Atlantic Root Network Inc., based in Georgia. Then, in 2001, ICANN created its own .biz. Some ISPs had to make a choice: keep sending queries to the independent root servers used by Atlantic Root or switch to the official one. The root operators eventually gave up the old .biz domain, reverting to the ICANN-approved domain, which is run by NeuStar Inc., of Sterling, Va.

Fears of Internet fragmentation are largely based on concerns about alternative roots. But roots that try to tamper with existing top-level domains are likely to be marginalized, or worse, and therefore would not impinge much on universality, says Karl Auerbach, a former ICANN board member who runs his own top-level domain, .ewe. “If any root system operator were not to carry .com, [the operator] would simply lose visibility,” he says. If another .com were substituted, says Auerbach, its operator would likely be sued successfully for trademark infringement.

Most alternative root system operators add new top-level domains to ICANN’s list. New.net Inc., an El Segundo, Calif., company, has deals with EarthLink and some other ISPs, giving their users access to top-level domains including .shop and .travel. A new entry to the field, Netherlands-based UnifiedRoot S&M BV, offers custom top-level domains.

Some root server operators offer the same top-level domains, and if this trend ever became widespread enough to really matter, the effect would be to confuse users and erode the Web’s universality.

But competing roots have never enjoyed a widespread following. “When was the last time somebody contacted you using a new.net name?” asks Milton Mueller, of Syracuse University’s School of Information Studies, in Syracuse, N.Y. If other new roots did become popular, the core top-level domains would likely remain universal, says Auerbach, but servers or users could select the extras they wanted. He says he once used a service called Grass Roots, now gone, which monitored all available roots and allowed operators to choose the top-level domains they wanted to serve.

China and some Arab countries have begun testing domain names in their native scripts. How to configure root servers to recognize such domain names is a touchy political issue, and as a result, countries could set up their own roots. Arab countries have tested domain names in Arabic by routing those queries to their own root servers, for example. But for now, the Chinese and Arab groups say they intend to maintain the officially recognized root, with only ICANN’s top-level domains.

Currently, the Chinese names can be translated into coded strings of ASCII characters, so they are intelligible as Roman script and thus available across the Internet, according to the China Internet Network Information Center, in Beijing. If China adopted its own roots, similar protocols could let servers translate between roots, says Mueller. Countries sharing alphabets would have to agree on how to register names, but China, Japan, and Korea have already published guidelines for their overlapping scripts. Coordinating several hundred roots, each offering domain names in a different language, would certainly be difficult, says Mueller, but “not as difficult as some Internet purists have led us to believe.”
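The article does not name the encoding, but the standard scheme for turning native-script names into ASCII strings is Punycode, applied to domain labels under the IDNA rules. As a rough sketch, assuming that is the scheme in question, Python's built-in "idna" codec shows the round trip; the helper names and the sample domain are chosen purely for illustration.

```python
def to_ascii(domain):
    """Encode a native-script domain name as an ASCII-compatible string."""
    return domain.encode("idna").decode("ascii")

def to_native(ascii_domain):
    """Decode an ASCII-compatible domain name back to its native script."""
    return ascii_domain.encode("ascii").decode("idna")

native_name = "中国.cn"            # illustrative name, not a real registration
ascii_name = to_ascii(native_name)  # each non-ASCII label gains an "xn--" prefix
round_trip = to_native(ascii_name)
```

Labels that are already plain ASCII, such as "cn" here, pass through unchanged, which is what lets existing servers carry the encoded names without modification.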

Bluetooth Cavities

As I'm sure most of you know, Bluetooth is a wireless networking standard that uses radio frequencies to set up a communications link between devices. The name comes from Harald Bluetooth, a 10th-century Danish king who united the provinces of Denmark under a single crown—just as Bluetooth, theoretically, will unite the world of portable, wireless devices under a single standard. Why name a modern technology after an obscure Danish king? Here's a clue: two of the most important companies backing the Bluetooth standard—L.M. Ericsson and Nokia Corp.—are Scandinavian.

But all is not so rosy in the Bluetooth kingdom these days. The promises of a Bluetooth-united world have become stuck in the mud of unfounded hyperbole, diminished expectations, and security loopholes. It's the last of those concerns that has the Bluetooth community reeling, as one security breach after another has appeared and been duly exploited. For our purposes, these so-called Bluetooth cavities have generated a pleasing vocabulary of new words and phrases to name and describe them.

In February 2004's Technically Speaking, I told you about the practice of bluejacking: temporarily hijacking another person's cellphone by sending it an anonymous text message using the Bluetooth wireless networking system. In a world where the only sure things are death, taxes, and spam, it won't surprise you one bit that people have bluejacked nearby devices to send them unsolicited commercial messages, a practice called, inevitably, bluespamming. (In a recent survey by the British public relations firm Rainier PR, 82 percent of respondents agreed that spam sent to their mobile phones would be "unacceptable." My question is: who are the 18 percent who apparently would find it acceptable?)

In that February column, I also told you about warchalking, using chalk to place a special symbol on a sidewalk or other surface that indicates a nearby wireless network, especially one that offers Internet access. Now black-hat hackers are wandering around neighborhoods looking for vulnerable Bluetooth devices. (Randomly searching for hackable Bluetooth devices is called bluestumbling; generating an inventory of the available services on the devices—such as voice or fax capabilities—is called bluebrowsing.) When they find them, they're chalking the Bluetooth symbol (the Nordic runes for the letters H and B, for Harald Bluetooth) on the sidewalk, a practice known as bluechalking.

Bluetooth crackers have recently learned to exploit problems in the Object Exchange (OBEX) Protocol, used to synchronize files between two nearby Bluetooth devices—a practice called pairing, which is a normal part of the Bluetooth connection process, but in this case it's done without the other person's permission. Once pairing is achieved, the crackers can copy the person's e-mail messages, calendar, and so on. This is known as bluesnarfing, and the perpetrators are called bluesnarfers. (The verb to snarf means to grab or snatch something, particularly without permission. It has been in the language since about the 1960s.)

A different Bluetooth security breach enables miscreants to perform bluebugging. This lets them not only read data on a Bluetooth-enabled cellphone but also eavesdrop on conversations and even send executable commands to the phone to initiate phone calls, send text messages, connect to the Internet, and more.

In the harmless-but-creepy department, the unique hardware address assigned to each Bluetooth device provides the impetus behind bluetracking, which is tracking people's whereabouts by following the signal of their Bluetooth devices. (Why anyone would want to do this remains a mystery, but most if not all of these hacks are forged by people who clearly have way too much time on their hands.)

Perhaps the weirdest of the recent Bluetooth hacks is the BlueSniper, a Bluetooth scanning device that looks like a sniper rifle with an antenna instead of a barrel. Point the BlueSniper in any direction and it picks up the signals of vulnerable devices up to a kilometer away (compared with the usual Bluetooth scanning distance of 10 meters). And, of course, the BlueSniper also lets you attack those distant devices with your favorite Bluetooth hack.

Not all recent Bluetooth developments have been security lapses. In 2004, the news wires and blogs were all aflutter over a new British phenomenon called toothing. Allegedly, complete strangers had been using their Bluetooth phones or PDAs to look for nearby Bluetooth-enabled devices and then sending out flirtatious text messages that supposedly led to furtive sexual encounters. Outrageous? Yes. True? Nope. The whole thing turned out to be a hoax.

Will all the negative stories lead to a Bluetooth backlash? Proponents of the networking standard say no, since the way to avoid almost all Bluetooth security hacks is to set up your device so that it's not discoverable—that is, it's not available to connect with other devices. In other words, the future of the Bluetooth standard may rest on a simple time-honored principle: "Just say no."

Securing Your Laptop

I’m a paranoid computer user. The first thing I do with a PC is install a full suite of “anti-” software programs—antivirus, antispy, antispam, you name it. I even leave Microsoft Vista’s “Annoy me constantly” mode turned on. So when I got a browser virus anyway, I lost my faith in my software security shields.

Just in time, along came the Yoggie Gatekeeper Pico. It’s a USB stick that bills itself as a replacement for all the security software we ordinarily run under Windows, designed with laptops in mind. All network traffic, wired or wireless, goes through the Pico before any Windows software sees it. And because the Pico is itself a complete computer, running Linux on an Intel XScale processor, it promises to bump up performance by supplanting the security software that now sucks cycles from your laptop’s central processing unit.

That claim got my attention, because I both design and play games, and what gamer doesn’t crave better performance? The price seemed right, too—I found it for US $149 up front and $30 a year for automatic updates (including, for example, new virus profiles), with the first year’s updates free.

Installation is supposed to be straightforward: just insert the Pico and install a driver from a CD. However, it didn’t work that way for me. The CD’s installer wouldn’t run on its own, so I ran it from Windows Explorer. Then, when the program launched Internet Explorer to register my Pico on Yoggie’s Web site, the browser reported an invalid site certificate—not a good sign for a security product.

"Mother of All Quantum Networks" Unveiled in Vienna

22 October 2008—Vienna has been the backdrop of some major milestones in the new science of quantum cryptography, and on 8 October, the city made its mark again. Scientists there have booted up the world’s largest, most complex quantum-information network, in which transmitted data is encoded as the quantum properties of photons, theoretically making the information impervious to eavesdropping.

Built at a cost of 11.4 million euros, the network spans approximately 200 kilometers, connecting six locations in Vienna and the neighboring town of St. Poelten, and has eight intermediary links that range between 6 and 82 km. The new network demonstrated a first for the technology—interoperability among several different quantum-cryptography schemes. The project, which took about six months longer to complete than planned, was so complex that some wags are calling the Viennese network “the mother of all quantum networks.”

Since 1984, when the concept was put forward by Charles Bennett of IBM and Gilles Brassard of the University of Montreal, quantum cryptography has seemed likely to become the ultimate unbreakable code. A fundamental tenet of quantum mechanics is that measuring a quantum system changes the nature of that quantum system. This makes data encoded as the quantum characteristics of particles—such as the polarization of photons—ideal for secure communication between two parties. According to convention in cryptography, the communicating parties are called Alice and Bob, while the eavesdropper is called Eve. Let’s say that Alice and Bob are using the polarization of photons for an encrypted exchange over a quantum channel. If Eve eavesdrops along the route, she can’t help but change the polarization of some of the photons, so Bob will always detect her.

Quantum communication devices do not communicate a message but are used instead to securely generate and distribute cryptographic keys—data needed to encode or decode a message. There are at least three companies marketing quantum-key distribution devices today.

Each of the individual technologies used to build the Viennese network—including quantum cryptography through optical fiber and quantum communication through the air— had been demonstrated before, but no one had been able to tie the various techniques together.

Project manager Christian Monyk, of the Austrian Research Centers, says that if a worldwide quantum-information network is to exist someday, it must be able to deal with various technology preferences that different users choose, just as a telecommunications network does today.

“This is something new in quantum cryptography,” said Nicolas Gisin of the University of Geneva, who worked on quantum-key distribution over the network. “The network aspect was the most important aspect. This is not a trivial network, as every quantum system can speak to the other ones.”

Gisin says that the various quantum-cryptography techniques have different strengths and weaknesses, so it became necessary to establish a certain minimum standard. This was done by setting specifications for error correction, bit rates, and response times and by developing software to ensure that the standards were met.

Conceptually, networks are built in layers, with the physical layer—the quantum-cryptography links, in this case—as the bottom layer and other parts, such as the functions that route messages, atop that layer. The hardest part of the project, according to Norbert Lütkenhaus of the University of Waterloo, in Canada, a theorist who worked on the security of the network, was adding layers atop the quantum cryptography while maintaining the security level of the cryptography.

In the network’s first demonstration, its designers showed that if one quantum link broke down, messages were securely rerouted without disruption using other nodes and links, much like what happens in an ordinary data network today.

The European Union–funded project, called SECOQC, which stands for “secure communication based on quantum cryptography,” was the culmination of four and a half years of effort. The project involved more than a dozen groups of researchers and industrial partners, including Siemens, which provided the standard commercially available optical fiber for the network, and ID Quantique, one of the producers of quantum-key distribution machines.

The next step, says Monyk, is “to try to install a virtual private quantum network in a network belonging to a telecom operator.”

Commercializing Quantum Keys

It’s a strange business, turning the esoteric quantum properties of light into money. But there are a few brave companies that have been trying to do just that for the last five years, and they may have hit on the right way to do it. What these firms, ID Quantique, MagiQ, and SmartQuantum, are trying to sell is a way of distributing a cryptographic key that is theoretically theft-proof, because it relies on the quirky quantum physics of photons. Such a “quantum key” distribution system could allow entities with secrets—banks, large technology firms, governments, and militaries—to encode and decode their data for transmission over optical fiber.

In the hopes of finally gaining customers, the companies involved have retooled their wares. Some are tying their quantum key distribution technology to high-bandwidth commercial devices that can use the keys to encrypt data. And some are looking to redesign their systems so that they can be integrated into telecom networks to make it more attractive for big carriers to offer quantum encrypted lines to their customers.

Quantum key distribution lets two computers generate a key between them by taking advantage of a quantum property of photons—the fact that a characteristic such as phase or polarization cannot be measured without changing it. A quantum key can be generated by transmitting a series of bits encoded using one or a few photons per bit and two types of polarization filters.

The bit the photon represents can be accurately read only by using the right filter. Use the wrong filter, and you change the bit. An interloper won’t know which filter the encoder used even though the sender and receiver can share that information. So the would-be thief can’t just insert himself between the sender and the receiver to read the bits, and any attempt he makes to do so will be easily noticed. Making it even more difficult for such data thieves, the systems these companies have developed commonly generate a new key about once a second.
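The filter-matching idea can be simulated in a few lines. The sketch below is a toy model in the spirit of Bennett and Brassard's 1984 scheme, not any vendor's implementation: the function names are invented, each bit is carried by one idealized photon, "+" and "x" stand for the two filter types, and the only attack modeled is an interloper who measures every photon and resends it.

```python
import random

def measure(bit, prepared_with, read_with, rng):
    """The right filter reads the bit; the wrong one yields a random bit."""
    return bit if prepared_with == read_with else rng.randint(0, 1)

def exchange_key(n_photons, eavesdrop, seed=0):
    """Simulate one key exchange; return both keys and their mismatch rate."""
    rng = random.Random(seed)
    sender_key, receiver_key = [], []
    for _ in range(n_photons):
        bit = rng.randint(0, 1)
        sender_filter = rng.choice("+x")
        photon_bit, photon_filter = bit, sender_filter

        if eavesdrop:
            # The interloper must guess a filter; a wrong guess scrambles
            # the bit, and the photon is resent as she measured it.
            thief_filter = rng.choice("+x")
            photon_bit = measure(photon_bit, photon_filter, thief_filter, rng)
            photon_filter = thief_filter

        receiver_filter = rng.choice("+x")
        received = measure(photon_bit, photon_filter, receiver_filter, rng)

        # Sender and receiver compare filter choices afterward and keep
        # only the bits where they happened to use the same filter.
        if receiver_filter == sender_filter:
            sender_key.append(bit)
            receiver_key.append(received)

    mismatches = sum(a != b for a, b in zip(sender_key, receiver_key))
    return sender_key, receiver_key, mismatches / max(len(sender_key), 1)

clean_sender, clean_receiver, clean_rate = exchange_key(2000, eavesdrop=False)
_, _, tapped_rate = exchange_key(2000, eavesdrop=True)
```

On an untapped line the two keys agree exactly. With the interloper in place, about a quarter of the kept bits disagree in this model, so comparing a sample of the key is enough to reveal the intrusion.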

ID Quantique, a spin-off of the University of Geneva, debuted the first commercial key distributor in 2002, followed quickly by MagiQ Technologies of New York City. By 2004 those two were joined by a French start-up, SmartQuantum, in Lannion. Big firms such as Mitsubishi, NEC, NTT, and Toshiba have been researching such systems as well.

What customers really wanted, says ID Quantique CEO Grégoire Ribordy, was not just a key distributor but an integrated system that could both distribute the keys and do the data encryption at gigabit-per-second rates—a hybrid of quantum and classical encryption machines. All three firms initially focused on developing such devices in-house.

ID Quantique built a 100-megabit-per-second device that distributed keys on one fiber and transmitted encrypted data on a second, and MagiQ produced one that operates at 2 gigabits per second. Meanwhile, SmartQuantum built a 2-Gb/s device that did both the key distribution and the encrypted data transfer on the same fiber.

But it has proved too difficult for small start-ups to get such a system on the market quickly enough to compete with more established firms selling standard high-bandwidth encryptors. Before customers will accept a new encryptor, it must pass a certification process that can take two or three years, says SmartQuantum’s commercialization and marketing director, François Guignot. “In the short term, we do not have the knowledge to develop a fully certified classical encryption system,” he says.

So ID Quantique and SmartQuantum have shifted gears. Instead of focusing on building their own encryption systems, they are partnering with classical encryption providers to integrate quantum key distribution into established products. In January, ID Quantique announced an arrangement with Melbourne, Australia–based data security firm Senetas Corp. that gave birth to a 1-Gb/s hybrid. In a hybrid, a single key distributor can serve multiple encryptors. “When your bandwidth requirements grow, you can add 1-gigabit-per-second encryptors,” says Ribordy. SmartQuantum is getting a similar integration project under way, using classical encryptors from two companies, which Guignot would not name.

For ID Quantique, integrating classical and quantum cryptography involved two steps. One was to develop a secure way of transferring the key from the quantum device to the classical one. The other was to come up with protocols for handling errors in the transmission and for synchronizing the two types of devices.

MagiQ, on the other hand, took a different path. It built its own integrated device through a partnership with Cavium Networks, in Mountain View, Calif., a maker of encryption/decryption microprocessors. Its 2-Gb/s product is scheduled for certification by the U.S. National Institute of Standards and Technology in 2007. And the company is pressing ahead on the bandwidth front, with an 8-Gb/s device due for production this month.

Even if their products are ready for the market, the market may not be ready for them. Banks, prime targets of ID Quantique, have only recently warmed to the idea of encrypting their data while it’s in transit, let alone using a new technology to do so.

Still, SmartQuantum’s Guignot believes that there could be a €300 million market by 2009 for quantum cryptography companies, but only if they convince telecom providers to make sales for them. Say a bank wants to securely link its London and Paris offices. A telecom company would install hybrid encryptors within the telecom network. Then the provider could lease the bank a hybrid encryptor and an optical-fiber connection to the network, giving the bank essentially impenetrable encryption along the entire path. “This would be a premium product,” says MagiQ CEO Robert Gelfond. He thinks providers could charge up to 30 percent more for a line like that.

The one hitch is that because commercial quantum key distribution works only over a maximum distance of 100 to 140 kilometers of fiber, the telecom provider might have to link several key distributors end to end within its network and guarantee that no one can gain access to the connection points. MagiQ has already taken the first step along this path. A year ago, it collaborated with U.S. carrier Verizon to demonstrate key distribution and data encryption over two linked 80-kilometer spans.
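The span arithmetic is simple enough to sketch. The function names below are hypothetical, and the model assumes equal-length spans with one guarded connection point between each pair.

```python
import math


def spans_needed(route_km, max_span_km):
    """Smallest number of key-distribution spans that covers the route."""
    return math.ceil(route_km / max_span_km)


def connection_points(route_km, max_span_km):
    """Intermediate sites the provider would have to keep physically secure."""
    return spans_needed(route_km, max_span_km) - 1
```

A 160-kilometer route built from 80-kilometer spans, as in the Verizon demonstration, gives two spans and one connection point to guard.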

Cryptographers Take On Quantum Computers

Software updates, e‑mail, online banking, and the entire realm of public-key cryptography and digital signatures rely on just two cryptography schemes to keep them secure—RSA and elliptic-curve cryptography (ECC). They are exceedingly impractical for today’s computers to crack, but if a quantum computer is ever built—which some predict could happen as soon as 10 years from now—it would be powerful enough to break both codes. Cryptographers are starting to take the threat seriously, and last fall many of them gathered at the PQCrypto conference, in Cincinnati, to examine the alternatives.

Any replacement will have some big shoes to fill. RSA is used for most of our public-key cryptography systems, where a message is encoded with a publicly available key and must be decrypted with a mathematically related secret key. ECC is used primarily for digital signatures, which are meant to prove that a message was actually sent by the claimed sender. “These two problems are like the two little legs on which the whole big body of digital signature and public-key cryptography stands,” says Johannes Buchmann, cochair of the conference and professor of computer science and mathematics at Technische Universität Darmstadt, in Germany.
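To make the public-key idea concrete, here is a toy RSA round trip. It is a sketch only: the primes are absurdly small, there is no padding, and the function names are assumptions. Real systems use vetted libraries and keys hundreds of digits long.

```python
def make_keys(p, q, e):
    """Build a toy key pair from two primes p and q and a public exponent e."""
    n = p * q
    phi = (p - 1) * (q - 1)
    d = pow(e, -1, phi)  # modular inverse of e (requires Python 3.8 or later)
    return (n, e), (n, d)


def encrypt(message, public_key):
    n, e = public_key
    return pow(message, e, n)


def decrypt(ciphertext, private_key):
    n, d = private_key
    return pow(ciphertext, d, n)


public, private = make_keys(61, 53, 17)
assert decrypt(encrypt(65, public), private) == 65
```

Anyone can encrypt with the public pair `(n, e)`, but decrypting requires `d`, which is easy to compute only for someone who knows the factors of `n`. That dependence on factoring is what a quantum computer would undermine.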

The reason that quantum computers are such a threat to RSA and ECC is that such machines compute using quantum physics. Unlike a classical computer, in which a bit can represent either 1 or 0, in a quantum computer a bit can represent 1 or 0 or a mixture of the two at the same time, letting the computer perform many computations simultaneously. That would shorten the time needed to break a strong 1024-bit RSA code from billions of years to a matter of minutes, says Martin Novotný, assistant professor of electrical engineering at the Czech Technical University, in Prague.

But quantum computers don’t have an advantage over every type of cryptography scheme. Experts say there are four major candidates to replace RSA and ECC that would be immune to a quantum computer attack. One prominent possibility is an ECC digital-signature replacement known as a hash-based signature scheme. A hash function is an algorithm that transforms text into a relatively short string of bits, called the signature. Its security is based on being able to produce a unique signature for any given input. Even inputs that are only slightly different from one another should produce different hashes, says Buchmann.
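The sensitivity of a hash to small input changes is easy to see with a standard function. The sketch below uses SHA-256 from Python's standard library purely as an example. The article does not say which hash function a hash-based signature scheme would use, and the helper names are assumptions.

```python
import hashlib


def digest(text):
    """Return the SHA-256 hash of the text as 64 hexadecimal characters."""
    return hashlib.sha256(text.encode("utf-8")).hexdigest()


def differing_bits(text_a, text_b):
    """Count how many of the 256 output bits differ between two inputs."""
    xor = int(digest(text_a), 16) ^ int(digest(text_b), 16)
    return bin(xor).count("1")
```

Calling `differing_bits("pay 100", "pay 101")` compares two messages that differ in a single character. With a well-behaved hash, the outputs typically differ in about half of their bits.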

Error-correcting codes are a potential replacement for public-key encryption, Buchmann says. Such a scheme would introduce errors into a message to make it unreadable. Only the intended receiver of the message would have the right code to correct the error and make the document readable.
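The error-correcting half of that idea can be sketched with the classic Hamming(7,4) code, which repairs any single flipped bit in a seven-bit block. This is an illustration only: a code this small offers no security, and in a real code-based scheme the structure of a much larger code would serve as the secret.

```python
def encode(data):
    """Encode 4 data bits as a 7-bit Hamming codeword (parity at positions 1, 2, 4)."""
    d1, d2, d3, d4 = data
    p1 = d1 ^ d2 ^ d4
    p2 = d1 ^ d3 ^ d4
    p4 = d2 ^ d3 ^ d4
    return [p1, p2, d1, p4, d2, d3, d4]


def decode(codeword):
    """Correct up to one flipped bit and return the 4 data bits."""
    c = list(codeword)
    s1 = c[0] ^ c[2] ^ c[4] ^ c[6]
    s2 = c[1] ^ c[2] ^ c[5] ^ c[6]
    s4 = c[3] ^ c[4] ^ c[5] ^ c[6]
    position = s1 + 2 * s2 + 4 * s4  # 0 means no error was detected
    if position:
        c[position - 1] ^= 1
    return [c[2], c[4], c[5], c[6]]


garbled = encode([1, 0, 1, 1])
garbled[4] ^= 1  # deliberately introduce an error
assert decode(garbled) == [1, 0, 1, 1]
```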

Another potential type of public-key cryptography replacement is known as multivariate public-key cryptosystems (MPKC). To crack it, a machine must solve multivariable nonlinear equations. This type of cryptography is extremely efficient and much faster to produce than other schemes, such as RSA, which must use numbers hundreds of digits long to be secure, says Jintai Ding, mathematics professor at the University of Cincinnati and cochair of the cryptography conference. So MPKC could be particularly useful in certain applications like RFID chips. “RFID chips and sensors have very limited computing power and memory capacity,” says Ding, whose specialty is MPKC. “But they are also very important in practical applications, so they need to be secure,” he adds.

Cryptographers are also discussing a lattice-based system, where the lattice is a set of points in a many-dimensioned space. Possibly useful for both digital signatures and public-key cryptography, a lattice system would be cracked by finding the shortest distance between a given point in space and the lattice. Buchmann says lattice systems are promising but still need much more research.
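A brute-force version of that search shows why the problem gets hard. The sketch below is a toy, with a hypothetical function name and a small search window. It tries every integer combination of the basis vectors, so its cost grows exponentially with the number of dimensions.

```python
import itertools
import math


def closest_lattice_point(basis, target, search=10):
    """Exhaustively find the lattice point nearest the target.

    basis: list of vectors; lattice points are integer combinations of them.
    search: coefficients are tried in the range -search..search (an assumption).
    """
    best = None
    for coeffs in itertools.product(range(-search, search + 1), repeat=len(basis)):
        point = tuple(
            sum(c * vector[i] for c, vector in zip(coeffs, basis))
            for i in range(len(target))
        )
        distance = math.dist(point, target)
        if best is None or distance < best[0]:
            best = (distance, point)
    return best
```

With two basis vectors and the default window, the loop tests 441 candidates. With 100 basis vectors it would have to test 21 to the 100th power, which is why exhaustive search is hopeless in the many-dimensioned spaces the scheme relies on.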

For the time being, our cryptosystems are safe, yet both Ding and Buchmann caution that the need to develop alternatives is growing more urgent. As we build more-powerful classical computers, RSA and ECC must become more complex to compensate. In 10 or 20 years, we might need to base RSA on prime numbers thousands of digits long to keep our secrets. That’s long enough to bog down some computers and prompt a replacement, even if quantum computers turn out to be a dead end.

Wireless Broadband In a Box

Lack of need is, of course, one reason for the unimpressive numbers. Outside of playing interactive games, which is hardly a universal activity, no broadband "killer app" has yet emerged. Another reason is difficulty in getting service. For a variety of reasons, many would-be subscribers have been unable to get cable or digital subscriber line (DSL) service. For them, a fairly new type of technology known as non-line-of-sight (NLOS) wireless may be just what they need.

At first, NLOS wireless may not sound like a big deal. After all, ordinary radios and cellphones are non-line-of-sight devices. But they don't carry broadband data [see "What is Broadband?"]. What makes the latest generation of NLOS wireless technology worth talking about and having is that it delivers data at high rates over substantial distances. Moreover, most implementations do so without the need for a visit from an installation technician.

This last point is crucial. Previous attempts to provide wireless Internet access to the home failed in large part because their installation costs were too high. For example, multichannel, multipoint distribution service (MMDS), which operates at 2.5 GHz, delivers high data rates over tens of kilometers. But first-generation systems required a directional antenna to be installed on the subscriber's premises within sight of a base station antenna.

Since service providers often find out that a potential location has no unimpeded view of the base station only when they send an installer to the site, the average successful installation takes more than one visit. With "truck rolls," as technician visits are known, costing anywhere from US $300 to $800, it could take more than a year's worth of subscriber revenue just to break even on the installation.

Worse, line-of-sight (LOS) systems do not scale well. When a base station's capacity reaches saturation, the usual procedure is to divide the coverage area into two pieces, use the existing antenna to serve half of it, and put up a new antenna to serve the other half. With directional antennas, that means that the antennas at roughly half the existing subscriber locations will have to be re-aimed at the new base station site, necessitating truck rolls that bring in no new revenue.

That's why communications companies—from household names like AT&T, Sprint, and WorldCom to newcomers like Winstar and Teligent, all of which rolled out broadband LOS wireless Internet access services, principally in the MMDS band—have pretty much given up on LOS wireless, at least insofar as price-sensitive residential customers are concerned. Last year, AT&T stopped service to 47 000 customers, Sprint and WorldCom slowed or halted their development, and both Teligent and Winstar filed for bankruptcy protection.

Enter NLOS. A number of technologists and investors believe that this relative newcomer can overcome the problems faced by existing line-of-sight wireless services. Briefly, the challenge they have to meet is to establish communication links with signal-to-noise ratios high enough to support broadband communications with easily installed, preferably indoor, antennas.

That goal may be achieved in several ways. Local-area networks, like those based on the popular IEEE 802.11b standard, do it by limiting the distance between transmitter and receiver. Cellphones operate over longer distances, but offer no broadband connectivity. LOS systems rely on a high-power transmitter at the base station, an unimpeded line of sight between transmitter and customer, and a highly directional outdoor antenna at the customer premises, all of which add up to a technology too expensive for the residential market.

NLOS attacks the problem with smart antennas, advanced modulation techniques, and, in some cases, a mesh architecture in which nodes—the individual routers on the customer's premises—are connected by multiple links [see figure]. The mesh architecture helps keep signal strength up by replacing single, long radio links with multiple short ones.

Dave Beyer of Nokia's Wireless Routing Group smiles from behind an array of decorated wireless routers, which when mounted on subscribers' buildings will configure themselves into a mesh network.

Whereas LOS base stations use omnidirectional or sectorized antennas that spew energy over large areas, non-mesh NLOS systems (those built around a central tower) fit their towers with small antenna arrays that direct the energy where it is needed. The advanced modulation techniques like orthogonal frequency-division multiplexing (OFDM) use the available radio spectrum with great efficiency, maximizing the number of bits per second they transmit per hertz of spectrum bandwidth. OFDM does that by sending data over multiple carriers within a frequency band.
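The multiple-carrier idea behind OFDM can be sketched in a few lines. This is a bare-bones illustration under stated assumptions: a QPSK mapping chosen for the example, one symbol per subcarrier, a plain discrete Fourier transform, and no cyclic prefix, noise, or channel model. Real systems add all of those.

```python
import cmath

# Hypothetical QPSK mapping: two bits select one point on each subcarrier.
QPSK = {(0, 0): 1 + 1j, (0, 1): -1 + 1j, (1, 1): -1 - 1j, (1, 0): 1 - 1j}


def idft(freq):
    n = len(freq)
    return [sum(freq[k] * cmath.exp(2j * cmath.pi * k * t / n) for k in range(n)) / n
            for t in range(n)]


def dft(samples):
    n = len(samples)
    return [sum(samples[t] * cmath.exp(-2j * cmath.pi * k * t / n) for t in range(n))
            for k in range(n)]


def ofdm_modulate(bits):
    """Map bit pairs onto subcarriers, then combine them into one time-domain block."""
    pairs = [tuple(bits[i:i + 2]) for i in range(0, len(bits), 2)]
    return idft([QPSK[pair] for pair in pairs])


def ofdm_demodulate(samples):
    """Separate the subcarriers again and read each one's two bits from its quadrant."""
    bits = []
    for symbol in dft(samples):
        bits += [1 if symbol.imag < 0 else 0, 1 if symbol.real < 0 else 0]
    return bits
```

Sixteen bits become eight subcarrier symbols that are sent together as a single block of eight samples. The receiver's transform pulls the carriers apart without their interfering with one another.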

Players in the NLOS field include equipment manufacturers like Nokia Corp. (Espoo, Finland) and Navini Networks Inc. (Richardson, Texas); companies like Iospan Wireless Inc. (San Jose, Calif.), which provide transmitter and receiver designs and chips; and Internet service providers (ISPs) like Vista Broadband Networks Inc. (Petaluma, Calif.) and T-Speed (Dallas), which sell wireless access service to customers.

How NLOS systems work

The most important technology in a point-to-multipoint (non-mesh) NLOS system is its smart base-station antenna. Instead of a single omnidirectional or sectorized antenna, these systems use an array of radiating elements. Each element is fed a version of the signal to be transmitted that differs from the others only in its amplitude and phase (time delay). The signals radiated by the array elements combine with each other in space to form one or more beams of carefully calibrated strength propagating in specific directions. The directions are so chosen that the beams—after bouncing off assorted objects in the environment, like mountains, buildings, motor vehicles, and even aircraft—all reach the location of the intended subscriber at the same time and in phase with one another [see figure]. When the beams combine constructively, the result is a strong signal at the receiver, which can therefore use an indoor antenna.
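How per-element phase shifts steer a beam can be sketched for the simplest case: a straight row of equally spaced elements radiating into free space. The half-wavelength spacing, the equal amplitudes, and the function names are assumptions for illustration. The systems described here also shape several beams at once to exploit reflections, which this sketch does not attempt.

```python
import cmath
import math


def steering_weights(n_elements, spacing_wavelengths, steer_deg):
    """Equal-amplitude weights whose phases aim the main beam steer_deg off broadside."""
    step = -2 * math.pi * spacing_wavelengths * math.sin(math.radians(steer_deg))
    return [cmath.exp(1j * step * k) for k in range(n_elements)]


def array_response(weights, spacing_wavelengths, look_deg):
    """Magnitude of the combined signal seen from direction look_deg."""
    psi = 2 * math.pi * spacing_wavelengths * math.sin(math.radians(look_deg))
    return abs(sum(w * cmath.exp(1j * psi * k) for k, w in enumerate(weights)))


weights = steering_weights(8, 0.5, 30)
strongest = max(range(-90, 91), key=lambda angle: array_response(weights, 0.5, angle))
assert strongest == 30  # the eight signals add in phase only in the steered direction
```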

Sounds good, but how do they do it? The answer is by first monitoring signals received from the subscriber unit to determine the characteristics of the environment and then by generating a complementary signal. For example, if the subscriber unit has a simple omnidirectional whip antenna, the signal it transmits will, in general, undergo multipath distortion—that is, it will take multiple paths to the base station, bouncing off various objects, being attenuated to various degrees, and undergoing various delays, depending on the different path lengths.

Say the base station receives two signals, one from the north and, 2 µs later, one from the east that is 8 dB weaker. Then the base station transmitter will format its signal into two beams, first a strong one to the east, and 2 µs later, one 8 dB weaker to the north. Of course, since the environment is constantly changing, the base station must keep monitoring subscriber transmissions, analyzing them, and updating its picture of the environment. Small wonder, then, that this sort of technology could not even be considered for commercial applications until cheap and powerful digital signal processors became available.
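The timing half of that example can be written out directly. The sketch below covers only the delays: the slower path is fed first so that both beams arrive together. It leaves the beam amplitudes out, and the function name and data layout are assumptions.

```python
def transmit_schedule(received_paths):
    """Turn measured arrival delays into send offsets that make all beams arrive together.

    received_paths: list of (direction, arrival_delay_in_microseconds) pairs.
    Returns (direction, send_offset_in_microseconds) pairs, earliest first.
    """
    longest = max(delay for _, delay in received_paths)
    schedule = [(direction, longest - delay) for direction, delay in received_paths]
    return sorted(schedule, key=lambda item: item[1])


# North arrived first; east arrived 2 microseconds later.
plan = transmit_schedule([("north", 0.0), ("east", 2.0)])
assert plan == [("east", 0.0), ("north", 2.0)]
```

Adding each send offset to its path delay gives the same arrival time for both beams, which is the condition for them to combine at the subscriber's antenna.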

Taking advantage of those processors, Navini Networks uses the technology in its Ripwave product line, versions of which operate in both the licensed and unlicensed bands in the vicinity of 2.5 GHz. According to Sai Subramanian, director of marketing and product line management, the base station antenna has eight elements, but is not very large because all eight elements are within a wavelength of each other, and at 2.5 GHz, a wavelength is just 120 mm.
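The wavelength figure is a one-line calculation, wavelength equals the speed of light divided by frequency, shown here as a quick check.

```python
SPEED_OF_LIGHT_M_PER_S = 299_792_458


def wavelength_mm(frequency_hz):
    """Free-space wavelength in millimeters."""
    return SPEED_OF_LIGHT_M_PER_S / frequency_hz * 1000


assert round(wavelength_mm(2.5e9)) == 120
```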

Although Navini Networks' subscriber premises equipment has two antennas, they don't work together as a phased array. Rather, they provide spatial diversity: it is less likely that two antennas will simultaneously find themselves in a dead spot than it is for one antenna. Navini has several U.S. trials under way.

In another approach, Iospan Wireless uses two transmit antennas at the base station and three receivers at both ends of its links. Iospan's multiple antenna technology, which was developed by the company's founder, Arogyaswami Paulraj, professor and head of the Smart Antenna Research Group at Stanford University, is known as MIMO, for multiple-input, multiple-output.

Referring to multipath distortion, Asif Naseem, vice president of business operations, marketing, and business development for Iospan, says, "Multipath is our friend. In the best conditions, we get six separate data streams out of the frequency chunk [there are six paths between three receivers and two transmitters] and realize multiples on the user data rate. In our tests from our base station on the roof to a customer over 1.5 km away, we are measuring over 13 Mb/s downstream and 6 Mb/s up. At 6 km we get over 6 Mb/s down and 4 Mb/s up. This is usable capacity." Iospan's multiple antenna enhancements of the OFDM modulation technique are being standardized in the IEEE 802.16 Working Group on Broadband Wireless Access Standards. The company is aiming the price of customer premises equipment at less than $500, and has begun trial deployments with partners in the United States and internationally. (Iospan will rely on others to manufacture, market, and install its systems; it simply provides ASICs, software, and reference designs.)

Smart antennas aren't the only way to sustain signal strength over long distances. Another way is to break those long distances into a series of shorter hops, with the signal boosted every time it is relayed from one node to another. That's the key idea behind the mesh approach. Each customer's equipment acts both as a means of connecting that customer to the network and as a node through which communications traffic from other customers in the vicinity is boosted and rebroadcast on its way to and from the facilities of the service provider.

So far, at least three California companies are promoting their use of the mesh network approach: Nokia's Wireless Routing Group (Mountain View), SkyPilot Network (Belmont), and CoWave Networks (Fremont). SkyPilot, still in quiet start-up mode, is making equipment and providing service and seems to be focused on the residential and small business market using 802.11 standard technologies. In its plans, CoWave talks primarily about providing equipment that uses licensed bands for these markets.

Farther along is Nokia, which has been shipping its R240 Wireless Router for about a year now, with over 60 wireless ISPs deploying networks around the country. Nokia also operates a test network near its offices in Mountain View to test new network software releases. Its technology has been deployed by Vista Broadband Networks (Petaluma, Calif.) to more than a hundred customers just beyond the wine country north of San Francisco after a test of more than two years. Vista offers 384-kb/s symmetric service for $45 per month and 1-Mb/s symmetric service for $55 per month. Installation today costs $200.

In the Mountain View network, about 80 customers within three or four blocks are participating, said Dave Beyer, head of Nokia Wireless Routing. Indeed, walking with Beyer around the test neighborhood, one of the authors (Schrick) could easily see many units mounted on buildings and houses [see photo]. These are the wireless routers that make up most of the customer premises equipment needed for the system. These routers accept not only the signals destined for their locations but others as well, which they boost and rebroadcast.

Router density is crucial, because, according to Donald Cox, a professor who leads Stanford University's Wireless Communications Research Group, maintaining an adequate signal-to-noise ratio would require a service provider to install a transceiver base station every 50 meters, a proposition that would appear to be prohibitively expensive. After all, the main reason that Ricochet, an early wireless Internet access provider, for example, went out of business was the high cost of deploying its network. But unlike Ricochet, Nokia's mesh approach makes the customer equipment part of the network and eliminates the need to rent space for each and every node. Nokia's Beyer estimates that the price of the wireless router and associated equipment for the home or office is about $800 in small quantities.

In addition to individual subscribers' wireless routers, Nokia's system uses base stations called AirHeads, each of which serves an area called an AirHood. The subscriber units and AirHeads are roof-mounted, but have no need for careful antenna siting and aiming.

Still, some criticism of the mesh architecture is common, Beyer notes, because it can increase the number of hops that a packet must take in both directions. But clearly, keeping hop lengths short keeps signal strength up, which allows higher data rates. This, Beyer claims, makes the AirHood and other mesh architectures much faster overall than distribution from a central point.

One concern with mesh networks is that the extra hops add latency, which can be a problem when carrying real-time interactive traffic like voice. Although each hop does indeed add some latency, Beyer says that a quality-of-service software upgrade to be released later this year will make high-quality voice communications possible for subscribers within three hops of the nearest AirHead.
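Counting hops in a mesh is a standard breadth-first search. The sketch below uses an invented six-node neighborhood and hypothetical names. It is not Nokia's routing software, which the article does not describe.

```python
from collections import deque


def hops_to_airhead(links, airhead):
    """Fewest radio hops from each reachable router to the AirHead (breadth-first search)."""
    hops = {airhead: 0}
    queue = deque([airhead])
    while queue:
        node = queue.popleft()
        for neighbor in links.get(node, ()):
            if neighbor not in hops:
                hops[neighbor] = hops[node] + 1
                queue.append(neighbor)
    return hops


# Hypothetical neighborhood: each router lists the routers it can reach directly.
links = {
    "airhead": ["a", "b"],
    "a": ["airhead", "c"],
    "b": ["airhead", "c"],
    "c": ["a", "b", "d"],
    "d": ["c", "e"],
    "e": ["d"],
}
hops = hops_to_airhead(links, "airhead")
voice_ready = sorted(node for node, count in hops.items() if 0 < count <= 3)
assert voice_ready == ["a", "b", "c", "d"]  # router "e" is four hops out
```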

Right now Nokia is focusing its wireless routers and system on North America in the industrial, scientific, and medical (ISM) public band. Europe and Asia are also potential markets, but Beyer says that even though the 2.4-GHz band is also public in those markets, the limits on radiated power are much stricter there, at 100 mW of effective radiated power compared with 4 W in North America. This makes a huge difference in range and coverage. European and Asian authorities have been petitioned to increase these power limits, and if they do, Nokia will reexamine those markets.
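The size of that gap is easy to quantify. The decibel figure below follows directly from the two power limits. The range estimate assumes ideal free-space propagation, where received power falls with the square of distance, so it should be read as an upper bound rather than a prediction for cluttered neighborhoods.

```python
import math


def power_ratio_db(power_a_watts, power_b_watts):
    """How many decibels stronger power_a is than power_b."""
    return 10 * math.log10(power_a_watts / power_b_watts)


def free_space_range_ratio(power_a_watts, power_b_watts):
    """Range multiplier under an inverse-square (free-space) assumption."""
    return math.sqrt(power_a_watts / power_b_watts)


assert round(power_ratio_db(4.0, 0.1), 1) == 16.0
assert round(free_space_range_ratio(4.0, 0.1), 1) == 6.3
```

Under that assumption, a 40-fold power advantage is worth about 16 dB, or a little more than six times the range.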

The new wireless technologies face substantial technical and commercial challenges [see "Grass-Roots Wireless Networks"]. Some of these broadband systems use regions of the spectrum that have, in the past two decades, been opened for public and private use. Equipment working in the public regions must coexist not only with similar equipment but also with completely different gear—microwave ovens, for example. The licensed bands, of course, do not face this uncertainty.

The well-publicized Bluetooth standard, now enshrined in part as IEEE Standard 802.15, lives in the ISM band, which is also where most 802.11 equipment works. If Bluetooth becomes prevalent and at the same time the use of 2.4-GHz cordless phones continues to grow, that band may become too crowded to support reliable service. Another consideration is that in the public spectrum, power must be kept low because of interference concerns. In the licensed parts, safety determines the permissible power levels, which results in higher limits.

Will they come?

What if you built a network and nobody came? The February 2002 FCC report also cited a survey from the Strategis Group (Washington, D.C.) that found that only 12 percent of on-line customers were willing to pay $40 per month for high-speed access, a number that rose to only 30 percent when the price was dropped to $25 per month.

Another survey, from Hart Research and The Winston Group (Washington, D.C.), quoted in a November 2001 Communications Daily article, found a similar mood, indicating that 36 percent of then-current dial-up users were interested in faster access, but not at current prices, which are well over $40 per month and rising. In other words, these surveys found no additional demand for high-speed access at today's prices.

Many experts believe that compelling new services will be needed before demand grows enough to drive broadband access to most households [see "Third-Generation Wireless Must Wait For Services to Catch Up," pp. 14-16]. Maha Achour, president and chief technical officer of Ulmtech (San Diego, Calif.) and a broadband wireless and fiber-optic communications expert, believes that what is needed to increase demand for broadband services is not games, as many claim, but learning, collaboration, and conferencing. "I think that distance learning and collaboration will be the killer applications—I strongly believe in on-line learning," she emphasizes.

Nevertheless, the numbers seem to indicate that we have a way to go before serious demand for high-speed Internet access develops among wireless users. Several more corporate flameouts may occur before we get the speedy, reliable, and safe services that new wireless network technologies promise.

Antennas for the New Airwaves

Let’s say you’ve gone and bought a high-definition LCD TV that’s as big as your outstretched arms. And perhaps you’ve also splurged on a 7.1 channel surround sound system, and an upconverting DVD player or maybe a sleek Blu-ray player. Maybe you’ve got a state-of-the-art game console or Apple TV or some other Web-based feed. Well, come 17 February, you just might want one more thing: a new antenna on your roof.

If you live in the United States and you’re one of the 19 million people who still prefer to pull their TV signals out of the air rather than pay a cable company to deliver them, you may already know that this month the vast majority of analog television broadcasts in the United States are scheduled to end and most free, over-the-air TV signals will be transmitted only in the new digital Advanced Television Systems Committee (ATSC) format. A massive advertising campaign is now telling people who get their signals from the ether that they’ll need a TV with a built-in ATSC tuner or a digital converter box to display their favorite programs.

What the ads don’t mention is that most of those people will also need a new antenna. For the vast majority of you out there in Broadcast-TV Land, the quality of what you see—or even whether you get a picture at all—will depend not on your TV or converter box but on the antenna that brings the signal to them.

If you have a cable or satellite hookup, you might think that this antenna issue is irrelevant—but think again. Some owners of high-end systems complain that the signals coming from their satellite or cable provider aren’t giving them the picture quality they expected. That’s because cable and satellite operators often use lossy compression algorithms to squeeze more channels, particularly local channels, into their allotted bandwidth. This compression often results in a picture with less detail than the corresponding terrestrial broadcast signal provides. For videophiles who have already spent a fortune on their home-theater systems, a couple of hundred dollars more for a top-of-the-line antenna obviously makes sense. And of course, antennas are also good backup for the times when the cable gets cut or the satellite system fades out due to rain or snow. In addition, they serve second TV sets in houses not wired to distribute signals to every room.

Suddenly the dowdy TV antenna, a piece of technology that has changed little over the past 30 years, is about to be the belle of the ball.

Gone, however, are the days when a large rooftop antenna was a status symbol. Cellphones and handheld GPS units have conditioned consumers to expect reliable wireless services in very small packages. Such dramatic changes in consumer preferences—coupled with the new frequency allocations, channel distributions, and high demand for reliable over-the-air digital antennas—mean that the time for new designs has indeed come.

Most TV viewers think of antennas as simple devices, but you’re tech savvy, so of course you’d never assume this. Nevertheless, bear with us briefly as we review Antennas 101.

The decades-old designs of most TV antennas on rooftops—and in the market today—are typically configured on a horizontal fish bone, with arms of varying lengths to handle a broad range of frequencies. Though the engineering of antennas in other spheres has advanced radically over the years, manufacturers of television equipment have stuck pretty much with the old designs for economic reasons. Traditional antennas were good enough for analog television, and the shrinking customer base for broadcast reception didn’t offer much incentive to plow money into new designs.

The transition to digital has changed all that. Most digital channels are broadcast in UHF, and UHF antennas are smaller than those used for analog TV, where most broadcast signals were VHF. Also, the multipath problem, arising from signals that reflect off buildings and hills, which may have occasionally caused ghosting on analog TVs, can completely destroy a digital picture.

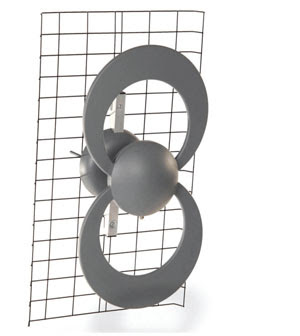

TV TOPPER: Introduced by Antiference, of Coleshill, England, in 2001, the Silver Sensor is an adaptation of the classic design of years past—a log-periodic array of horizontal receiving elements. Versions of the Silver Sensor are sold by LG Electronics under its Zenith brand and by Philips (shown here).

A few designers and manufacturers have done the necessary research and development and introduced improved models. First out of the labs were the Silver Sensor, introduced by Antiference, based in Coleshill, England, in 2001, and the SquareShooter, introduced in 2004 by Winegard, in Burlington, Iowa.

The Silver Sensor [see “TV Topper”], an indoor antenna designed for UHF reception, is based on the classic log-periodic design, which means that the electrical properties repeat periodically with the logarithm of frequency. Done right, a log-periodic design offers good performance over a wide band of frequencies.
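In the textbook log-periodic dipole array, that repetition comes from scaling each element by a constant ratio relative to its neighbor. The sketch below generates such a series of half-wave element lengths. The scale factor of 0.9 and the band edges are assumptions chosen for illustration, not the Silver Sensor's actual dimensions, and practical designs extend somewhat past the band edges.

```python
SPEED_OF_LIGHT_MM_MHZ = 299_792.458  # speed of light expressed in millimeters times megahertz


def element_lengths_mm(lowest_mhz, highest_mhz, tau=0.9):
    """Half-wave element lengths, each tau times the previous one."""
    length = SPEED_OF_LIGHT_MM_MHZ / lowest_mhz / 2
    shortest = SPEED_OF_LIGHT_MM_MHZ / highest_mhz / 2
    lengths = []
    while length >= shortest:
        lengths.append(length)
        length *= tau
    return lengths


lengths = element_lengths_mm(470, 698)
assert len(lengths) == 4 and round(lengths[0]) == 319
```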

UNDER THE HOOD: The Winegard SquareShooter consists of a two-arm sinuous element and grid reflector attached to an inexpensive Mylar substrate and encased in a plastic dome. The element responds across a broad range of frequencies; its size is optimal for the UHF band. The open grid behind it is sized to reflect UHF frequencies, which reduces the multipath problem by blocking some of the signals that bounce off buildings or hills instead of coming directly to the antenna.

The outdoor SquareShooter’s element has a sinuous shape, which helps it respond across a broad UHF range of frequencies [see “Under the Hood”]. It’s mounted in front of an open grid that reflects UHF waves, thereby reducing the multipath problem by blocking signals arriving from behind the antenna.

Still, the pace of product introductions is slow. Some manufacturers still relabel old designs as HDTV antennas, as long as they generally cover the right part of the spectrum. But some very good designs are finally on the market—if you know what to look for.

Antennas are much better now than they used to be. But two changes will make them even better in the next several years: the introduction of powerful new software tools for designing antennas, and a slew of new regulations in the United States that will reduce the range of frequencies that a TV antenna must receive. Together, these factors will lead the way to smaller antennas [see “Loop de Loop”] that work better and look better than the apparatus that sprouted from the roof of your grandparents’ house 50 years ago.

LOOP DE LOOP: The new ClearStream2, from Antennas Direct, uses thicker-than-traditional elements and tapers the thickness of the loops, which allows the antenna to respond to a greater range of frequencies. The resulting tapered-loop antenna is half the volume of the equivalently performing bow-tie array that has been on the market for years. As with the SquareShooter, the grid acts to defend the antenna against multipath interference.

The key developments that have changed antenna design are computational electromagnetic codes, advanced search-and-optimization methods like genetic algorithms, and improved measurement tools such as the vector network analyzer. Traditionally, antenna designers used pencil and paper to wrestle with Maxwell’s equations, the four equations that describe electric and magnetic fields. Then engineers spent enormous amounts of time in the laboratory, testing and tweaking designs. Computational electromagnetic codes, a breed of program that solves Maxwell’s equations on a computer, have revolutionized antenna design by allowing the engineer to simulate the real-world electromagnetic behavior of an antenna before it is built.

As computer power increased in the 1990s, antenna engineers began using automatic search-and-optimization methods to sort through the successive designs their codes generated. In particular, they used genetic algorithms, which emulate the Darwinian principle of natural selection through a survival-of-the-fittest approach. After sifting through millions of possible design configurations, the algorithm can zoom in on a handful of promising optimal designs that meet specified performance and size criteria.
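The survival-of-the-fittest loop described above can be illustrated with a toy sketch. It is not the method any particular design tool uses: a real workflow would score each candidate with a computational electromagnetic code, whereas here the fitness function is an idealized textbook formula (a half-wave dipole resonates where its total length is half a wavelength). The function names, population size, mutation step, and search range are all assumptions chosen for illustration.

```python
import random

C = 299_792_458.0  # speed of light in m/s

def resonant_freq_hz(length_m):
    """Idealized half-wave dipole: resonance where total length = wavelength / 2."""
    return C / (2.0 * length_m)

def fitness(length_m, target_hz):
    """Higher is better: negative distance from the target frequency."""
    return -abs(resonant_freq_hz(length_m) - target_hz)

def evolve(target_hz, pop_size=40, generations=80, seed=1):
    """Toy genetic algorithm over one design variable (total dipole length)."""
    rng = random.Random(seed)          # seeded so runs are repeatable
    lo, hi = 0.05, 2.0                 # assumed search range, in metres
    pop = [rng.uniform(lo, hi) for _ in range(pop_size)]
    for _ in range(generations):
        # Selection: keep the fitter half of the population unchanged.
        pop.sort(key=lambda length: fitness(length, target_hz), reverse=True)
        survivors = pop[: pop_size // 2]
        # Crossover and mutation: average two parents, then add small noise.
        children = []
        while len(survivors) + len(children) < pop_size:
            a, b = rng.sample(survivors, 2)
            child = 0.5 * (a + b) + rng.gauss(0.0, 0.01)
            children.append(min(hi, max(lo, child)))
        pop = survivors + children
    return max(pop, key=lambda length: fitness(length, target_hz))

if __name__ == "__main__":
    best = evolve(600e6)  # 600 MHz, a mid-UHF frequency
    print(f"best length: {best:.4f} m, ideal: {C / (2 * 600e6):.4f} m")
```

With a single design variable the search is trivial, which is the point of the sketch: the same select, recombine, and mutate cycle scales to the many geometric parameters of a real antenna, where no closed-form answer exists.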

Thanks to these tools, antenna designers can focus more on the antenna itself and less on the math. And the months that used to be spent testing prototypes are now compressed into days—or even just hours—of simulation. Designers now go to the laboratory only for a final check, to confirm the accuracy of their computations.

And in the lab, life is a lot easier today than it was several decades ago. To check antenna performance, engineers have to measure its impedance accurately across a huge frequency range. That is what a modern vector network analyzer now lets them do easily and quickly. Decades ago, engineers had to calculate, for each channel’s frequency, the impedance from measurements of voltage signals in the cable leading to the antenna. This was a laborious and often imprecise process.

Regulations that reduce the range of frequencies a television must receive are driving big changes in antenna design. Because an antenna's bandwidth trades off against its gain and size, the narrower range relaxes those other two main design constraints, making it possible to build smaller and far less conspicuous antennas than the monstrosities of yore.

These changes are part of a frequency reallocation that has turned the various parties claiming pieces of the radio spectrum into players in a game of musical chairs. In the analog television world, TV broadcasts occupy three bands. The low-VHF band covers 54 to 88 megahertz, the high-VHF band covers 174 to 216 MHz, and the UHF band covers 470 to 806 MHz. Earlier the UHF band stretched all the way to 890 MHz, but some of this bandwidth was given up in the 1980s to provide spectrum for cellphone communications. The two VHF bands will be retained in the transition to digital TV, but the ceiling of the UHF band will be reduced to 698 MHz, making room for new wireless services and for applications involving homeland security. This is 108 MHz narrower than the current UHF TV allocations and 192 MHz narrower than the older allocations, which extended out to channel 83.
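The band arithmetic in the paragraph above can be checked with a few lines of Python. The band edges are taken directly from the text; the dictionary keys and function names are labels invented for this sketch.

```python
# Band edges in MHz, as given in the text.
BANDS_MHZ = {
    "low-VHF": (54, 88),
    "high-VHF": (174, 216),
    "UHF (older, to channel 83)": (470, 890),
    "UHF (analog)": (470, 806),
    "UHF (digital)": (470, 698),
}

def width_mhz(name):
    """Width of a named band in MHz."""
    low, high = BANDS_MHZ[name]
    return high - low

def reduction_mhz(old, new):
    """How much narrower the new band is than the old one, in MHz."""
    return width_mhz(old) - width_mhz(new)

if __name__ == "__main__":
    for name in BANDS_MHZ:
        print(f"{name}: {width_mhz(name)} MHz wide")
    print(reduction_mhz("UHF (analog)", "UHF (digital)"))                # 108
    print(reduction_mhz("UHF (older, to channel 83)", "UHF (digital)"))  # 192
```

The digital UHF band comes out 228 MHz wide, against 336 MHz for the analog allocation and 420 MHz for the older one.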

Notably, the older and wider UHF bands were in effect when most of the TV antennas on the market today were designed. The old designs are therefore rarely optimal for the new spectrum allocations because the antennas had to cover the wider bands and higher frequencies.

The transition to digital has also changed how the stations are distributed across the allocated bands. In the days of analog, most TV stations were on the VHF band, with smaller, less powerful stations in the UHF band. But now, in the fast-approaching digital world, roughly 74 percent of the stations are on the UHF band and 25 percent are on the high-VHF band (today’s channels 7 through 13). Only about 1 percent of the stations will be in the low-VHF band (channels 2 through 6).

Gain is not the be-all and end-all of antenna design—far from it, as any antenna engineer will tell you. But you’d be hard-pressed to know that if spec sheets and marketing hype are your main sources of information.

Gain, usually expressed in decibels, indicates how well the antenna focuses energy from a particular direction as compared with a standard reference antenna. Because most spec sheets don’t give a two- or three-dimensional radiation plot, the gain number specified is the value in the direction of maximum intensity. What that means is that if the broadcast stations you’re trying to receive do not all line up like points on a single straight line from your home, you could have a problem with an antenna whose gain drops off dramatically from that sweet spot. Also, “gain” in this usage doesn’t include losses from impedance mismatch. In practice, the antenna’s performance will degrade if its impedance is different from that of the cable connected to it.
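To put a number on the impedance-mismatch penalty mentioned above, here is a minimal sketch using the standard transmission-line relations: the reflection coefficient is (Z_antenna − Z_cable) / (Z_antenna + Z_cable), and the fraction of power delivered is 1 − |Γ|². The 75-ohm cable value is an assumption (it is the usual figure for TV coaxial cable), and the 300-ohm antenna and 10-dB gain are simply example inputs, not figures from any spec sheet.

```python
import math

def reflection_coefficient(z_antenna, z_cable=75.0):
    """Reflection coefficient at the antenna/cable junction (may be complex)."""
    return (z_antenna - z_cable) / (z_antenna + z_cable)

def mismatch_loss_db(z_antenna, z_cable=75.0):
    """Power lost to reflection, in dB. Undefined for total reflection."""
    gamma = abs(reflection_coefficient(z_antenna, z_cable))
    return -10.0 * math.log10(1.0 - gamma ** 2)

if __name__ == "__main__":
    quoted_gain_db = 10.0                 # hypothetical spec-sheet gain
    loss_db = mismatch_loss_db(300.0)     # 300-ohm antenna on 75-ohm cable
    print(f"mismatch loss: {loss_db:.2f} dB")
    print(f"gain after mismatch: {quoted_gain_db - loss_db:.2f} dB")
```

In this example, a 300-ohm antenna connected straight to 75-ohm cable gives up about 1.9 dB of its quoted gain, which is why a matching transformer is normally placed between the two.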

For these gain calculations, the reference antenna is often a half-wave dipole antenna, the most common type of antenna, which is composed of two metal rods, each one-quarter the length of the signal wavelength. The signal is taken from the antenna through a connection between the two conductors. The classic TV antenna is a log-periodic array of dipoles, with each dipole receiving a different VHF or UHF frequency.
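The quarter-wavelength rule above translates directly into rod lengths. The sketch below uses the free-space wavelength, so the numbers are idealized; practical rods are typically cut a few percent shorter. The function names are illustrative.

```python
C = 299_792_458.0  # speed of light in m/s

def wavelength_m(freq_hz):
    """Free-space wavelength in metres."""
    return C / freq_hz

def dipole_rod_length_m(freq_hz):
    """Length of each of the two rods of an ideal half-wave dipole."""
    return wavelength_m(freq_hz) / 4.0

if __name__ == "__main__":
    for label, mhz in [("low-VHF floor", 54), ("high-VHF floor", 174),
                       ("UHF floor", 470), ("new UHF ceiling", 698)]:
        rod = dipole_rod_length_m(mhz * 1e6)
        print(f"{label} ({mhz} MHz): each rod about {rod:.3f} m")
```

The spread is large: roughly 1.39 m per rod at 54 MHz against about 0.11 m at 698 MHz, which is why an antenna that must cover the low-VHF band is so much bulkier than a UHF-only design.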

When shopping for an antenna, consider the gain, but not to the exclusion of all other characteristics. Buying an antenna based on gain and price alone would be like going shopping for an automobile and considering only power and price; you might end up with a 500‑horsepower engine attached to a skateboard. While higher values of gain—in the 7- to 12‑decibel range—are usually better than lower values, most consumers will be better off not focusing on gain but instead purchasing a unit that provides good overall performance, as long as it meets their reception and installation requirements [see “The New Antennas: A Sampling”].

A family in rural Nebraska, for instance, might need a large, highly directional antenna, a tower of about 18 meters (60 feet), and a preamplifier to pick up stations, which would more than likely be located somewhere over the horizon. But that would be complete overkill for somebody living in Salt Lake City, where all the broadcast towers are on a mountain ridge just above the city with a line-of-sight path between most viewers and the tower. Anywhere near Salt Lake City you’ll get great reception even with a small indoor UHF antenna.

Don’t be fooled by claims of astoundingly high gain. Some manufacturers are marketing small indoor antennas and labeling the boxes with gain numbers between 30 and 55 dB. This kind of unit is actually an antenna paired with an amplifier, and the gain value stated on the package is really the gain of the amplifier and not that of the antenna. While it is possible to improve reception by using a well-designed low-noise amplifier, most of the inexpensive antennas designed this way actually have cheap amplifiers and too much gain. That combination generally overloads the amplifier—and potentially the receiver as well—causing signal distortion that can degrade or eliminate DTV reception entirely. Most consumers are better off with a well-designed nonamplified unit, also known as a passive antenna. If television reception does require an amplifier, the best choice is a high-quality, low-noise model connected as close as possible to the antenna.
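Converting decibels to plain power ratios shows why the boxed numbers cannot be antenna gain. The conversion is the standard one, ratio = 10^(dB/10); the function names are illustrative, and the decibel values are the ones quoted in the text.

```python
import math

def db_to_power_ratio(db):
    """Convert a gain in decibels to a linear power ratio."""
    return 10.0 ** (db / 10.0)

def power_ratio_to_db(ratio):
    """Convert a linear power ratio back to decibels."""
    return 10.0 * math.log10(ratio)

if __name__ == "__main__":
    for label, db in [("good passive antenna, low end", 7),
                      ("good passive antenna, high end", 12),
                      ("boxed claim, low end", 30),
                      ("boxed claim, high end", 55)]:
        print(f"{label}: {db} dB = {db_to_power_ratio(db):,.0f}x power")
```

A 7- to 12-dB antenna concentrates power by a factor of roughly 5 to 16 relative to the reference antenna, whereas 30 to 55 dB corresponds to factors of 1,000 to more than 300,000, figures that only an amplifier can produce.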

Consumers switching to digital TV using just an indoor antenna for reception face a more difficult problem. What was good enough to provide a watchable, if slightly snowy, analog broadcast is likely to bring nothing but a blank screen in the digital world. Even the better antennas must sometimes be readjusted to receive certain channels, forcing viewers out of their easy chairs to fiddle with their antennas.

But couch potatoes might soon be able to stay planted, thanks to a new standard approved by the Consumer Electronics Association (CEA) in 2008. The ANSI/CEA-909A Antenna Control Interface standard allows the television receiver to communicate with the antenna, instructing it to adjust and automatically lock onto the signal as the viewer channel surfs. Because the antenna and the receiver both “know” what channel the user is watching, the antenna can change either physically or electrically to adjust tuning, direction, amplifier gain, or polarization. The ability to adjust antenna tuning for each channel allows engineers to use a narrowband antenna element to cover a wide range of frequencies. This strategy is often referred to as tunable bandwidth. A simple example of tunable bandwidth is to use a switch to connect two short rods into a larger rod, thus reducing the resonant frequency.

Tunable bandwidth relaxes many of the design compromises, so manufacturers can produce smaller, higher-performance antennas. This is particularly important for indoor antennas, where compactness and aesthetics are key to adoption.

Not only will ANSI/CEA-909A eliminate having to fool with the antenna all the time, it should make it easier for engineers to design the high-performance, aesthetically pleasing small antennas that everyone wants. Audiovox Corp., of Hauppauge, N.Y.; Broadcom Corp., of Irvine, Calif.; and Funai Electric Co., of Daito, Japan, have demonstrated 909A-compliant smart antennas, but these designs have yet to be widely distributed because hardly any TV receivers on the market are compatible with them.

That might soon change. The National Association of Broadcasters (NAB)—the trade association that represents broadcast TV stations—and others are doing their best to stimulate a market for 909A-enabled antennas and receivers by promoting the new smart antennas. The NAB is particularly interested in the 909A technology because difficulty in adjusting the antenna was one of the factors that drove millions of consumers to cable or satellite in years past. In 2008, the NAB funded Antennas Direct, the company that one of us (Schneider) founded, to develop 909A-compliant smart antennas because without such a device on the market, television manufacturers would have no compelling reason to add the required interface circuitry to television tuners.

To help encourage manufacturers to do so, the July 2007 draft of the 909A standard made a change in the original specification. In the original design, the signals to the antenna went over a dedicated cable, which meant another jack in the back of TVs and another cable for consumers already struggling with a tangle of wires. The revised standard allows signals to be sent over the same coaxial cable that transmits the television signal. The single-cable solution should spur television manufacturers to adopt the standard, because many are reluctant to include additional connection ports on the already crowded rear panel of a modern flat-panel TV. It will also simplify the connections for technology-challenged consumers.

With more free content, superior picture quality, and viable indoor antenna options coming soon, the broadcasters may finally be in a position to—dare we say it?—start stealing viewers back from cable and satellite.

And perhaps strangest of all, an antenna on the rooftop or perched in the living room may once again become a status symbol, as it was in the early days of television. It won’t be showing off that you’re rich enough to have a TV—just smart enough to get the most out of it.

To Probe Further

The CEA and NAB provide a Web site (http://www.antennaweb.org) to help consumers learn about the transition to digital television. The Web site has tools that indicate what channels are available in specific locations and how to select an antenna to receive them. Antennas Direct hosts a similar site (http://www.antennapoint.com), which also maps transmitter locations and gives distances and bearings relative to any specified location in the United States. Both sites offer advice on particular antennas and how to install them for best results.

JOHN ROSS & RICHARD SCHNEIDER explain why this month’s planned conversion to all-digital television broadcasts in the United States has sparked a revolution in antenna design. Ross, an IEEE senior member and coauthor of “Antennas for the New Airwaves” [p. 44], consults on antenna design and RF electromagnetics. His home antenna is an old prototype ClearStream1 that sits on a bookshelf and receives 24 digital stations from the Salt Lake City area. Coauthor Schneider, president and founder of St. Louis–based Antennas Direct, uses a ClearStream4 antenna mounted on his roof to receive over 20 stations, including a half dozen from Columbia, Mo., some 160 kilometers away.

Wi-Fi Hotspot Networks Sprout Like Mushrooms

Wi-Fi communication cards for devices like laptops are selling at an estimated 1-1.5 million per month, and most city centers offer scores of opportunities for people carrying equipment outfitted with the cards to access IEEE 802.11b networks. In the central part of Manhattan alone, in just 90 minutes, participants at a recent hackers' convention were able to detect some 450 such networks. Picking up on a tradition once practiced by wandering hobos during the Great Depression, data-hungry itinerants have taken to marking sidewalks to flag opportunities to hitch a ride in nearby corporate 802.11 networks.

It's an annoyance for businesses attempting to secure already busy local networks against intruders, and one that's being addressed by evolving IEEE 802.11 standards [see "The ABCs of IEEE 802.11"]. But in an otherwise struggling communications sector, it also could be a gold mine, if only service providers can figure out ways of standardizing access to public local-area networks across wider areas and obtaining revenues for services offered over those networks.

With just such prospects in mind, U.S. industry heavyweights, including IBM, Intel, AT&T Wireless Services, and Verizon Communications, disclosed in June that they may soon launch a company, code-named Project Rainbow, to provide a national Wi-Fi service for business travelers.

While Rainbow may be the biggest project of its kind in the United States, it's just the latest of many. Boingo Wireless Inc., iPass Inc., and Sputnik Inc. are among the new wireless Internet providers selling services that let customers use wireless access points around the country.

Europe—the new frontier?

It is in Europe, however, where the creation of transregional Wi-Fi networks may be taking off the fastest and where opportunities and challenges are coming into sharpest relief. To be sure, IEEE 802.11b has prompted some serious concern about its potential impact on third-generation mobile technologies. The Europeans have championed 3G cellular telephony in global standards organizations, as a successor to their hugely successful Global System for Mobile Communications (GSM).

Yet Wi-Fi also stands to benefit from the fact that GSM systems are found everywhere in Europe, which creates opportunities for synergies, and from the various kinds of cards Europeans are accustomed to using to make their phone systems work to best effect. With wireless connections and cards for user authentication and billing, European providers are in a position to offer secure IEEE 802.11 roaming on a continental scale.

In July, TDC Mobile, the mobile arm of Danish incumbent telephone company Tele Danmark, signed a contract with Ericsson to deploy a Wi-Fi system to link with GSM. The Danish operator plans to integrate an IEEE 802.11 service into its GSM network, using mobile phones and their subscriber identification module (SIM) cards to deliver passwords that control access. (All GSM phones contain SIM cards, which include the user's phone number, account information, phone directories, and so on.)